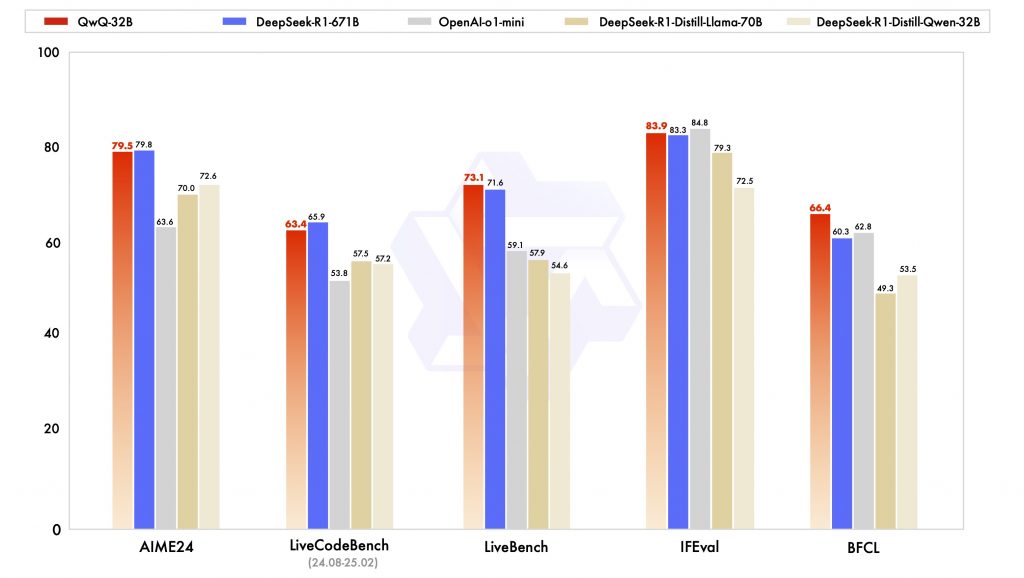

Alibaba has unveiled its new QwQ-32B AI model, claiming that it outperforms competitors such as DeepSeek’s R1 and OpenAI’s models in coding and problem-solving tasks while requiring fewer computational resources. The announcement has pushed Alibaba toward the front of the AI race and was followed by a sharp rise in its stock, with shares climbing nearly 8.4% on Thursday to close at HK$140.80.

Understanding the QwQ-32B AI Model

Source: Qwen

The QwQ-32B is an AI model with a relatively modest 32 billion parameters. That count is notable next to DeepSeek’s R1, which has 671 billion parameters, roughly 21 times as many. Despite its smaller parameter count, the QwQ-32B is reported to perform strongly in several areas, particularly:

- Mathematics

- Coding

- General problem-solving

Such performance has sparked interest in the implications of leveraging smaller AI models that require fewer resources, which could make them more accessible to a broader range of users and applications.

Benefits of a Smaller Parameter Count

The Alibaba team emphasizes the advantages of a smaller parameter count, underlining that:

- Reduced computation requirements make it easier to deploy the model across various platforms and devices.

- Industries that rely on AI can adopt the model more widely without needing extensive infrastructure.

- Enhanced energy efficiency contributes to a lower carbon footprint for AI applications.

These attributes position the QwQ-32B not only as a high-performing model but also as a potentially sustainable option for future AI developments.
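To make the resource argument concrete, here is a rough back-of-the-envelope sketch in Python. It estimates only the memory needed to store each model's weights, using the parameter counts quoted above. The precision levels and the function names are illustrative assumptions, not figures or tooling published by Alibaba or DeepSeek, and the estimate ignores everything else a running model needs (activations, caches, serving overhead).

```python
# Rough estimate: weight storage = parameter count x bytes per parameter.
# Parameter counts come from the article; the precision choices below are
# illustrative assumptions, not published deployment settings.

MODELS = {
    "QwQ-32B": 32e9,       # 32 billion parameters
    "DeepSeek R1": 671e9,  # 671 billion parameters
}

# Bytes used to store one parameter at common numeric precisions.
PRECISIONS = {
    "16-bit": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def footprint_table() -> dict:
    """Return {model: {precision: gigabytes}} for every model and precision."""
    return {
        name: {
            label: weight_memory_gb(params, size)
            for label, size in PRECISIONS.items()
        }
        for name, params in MODELS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        cells = ", ".join(f"{label}: {gb:,.1f} GB" for label, gb in row.items())
        print(f"{name:12s} {cells}")
```

Under these assumptions, the 32-billion-parameter model needs about 64 GB for its weights at 16-bit precision, against roughly 1,342 GB for a 671-billion-parameter model. That gap is what separates a single high-memory machine from a multi-server deployment.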

The Context of the AI Landscape

The launch of the QwQ-32B comes on the heels of a major upheaval in the global tech landscape caused by DeepSeek’s R1, which set new expectations for AI capabilities. This rivalry among industry leaders has evidently pushed Alibaba to escalate its efforts in AI research and development.

Recent Success of DeepSeek’s R1

For context, DeepSeek’s R1 made headlines recently owing to its high parameter count and the subsequent implications for AI performance. The advent of such models initially raised concerns that smaller models like Alibaba’s QwQ-32B might be overshadowed. However, the recent comparison highlights a shifting paradigm in AI, where efficiency and performance can go hand-in-hand.

The Future of AI: What Lies Ahead

As more companies invest in AI technologies, the competition is expected to intensify. Alibaba’s QwQ-32B stands as a testament to the potential of smaller models that can still challenge giants like DeepSeek and OpenAI. The implications for industries relying on AI are profound.

Key Predictions for AI Development

Experts predict several emerging trends in the AI landscape:

- Focus on Lightweight Models: The success of QwQ-32B could lead to increased interest in smaller models that balance performance and resource requirements.

- Enhancements in Efficiency: Companies will increasingly prioritize models that offer high output with lower environmental impact.

- Collaboration Over Competition: As the industry matures, more partnerships may emerge, enabling various companies to leverage each other’s strengths.

Conclusion

Overall, Alibaba’s QwQ-32B model represents a significant step forward in the AI race, underscoring that size does not always correlate with capability. As the tech community watches these developments, innovative solutions may well emerge from unassuming corners of the tech world. With its emphasis on open-source technology, Alibaba’s initiative could usher in a new era of accessible AI, transforming how industries use artificial intelligence.

For those interested in understanding the evolving landscape of AI technologies, the ongoing competition and innovations from players like Alibaba, DeepSeek, and OpenAI offer valuable insights into where technology might head in the future.