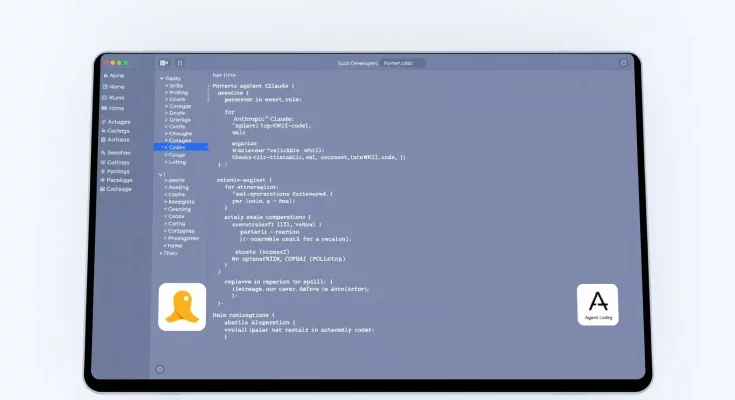

The Next Frontier: Xcode 26.3 and Agentic Coding

In a move that signals a massive shift in how software is created, Apple has officially introduced agentic coding capabilities to its flagship development environment with the release of Xcode 26.3. While the industry has spent the last few years acclimating to AI-powered autocomplete and chat-based assistants, Apple is now pushing the boundaries by integrating autonomous AI agents from industry leaders Anthropic and OpenAI directly into the IDE.

This update transforms Xcode from a passive code editor into an active collaborator. For developers, this means the ability to delegate entire workflows—from initial architectural planning to unit testing and debugging—to sophisticated models like Anthropic’s Claude Agent and OpenAI’s Codex. The shift from “assistance” to “agency” is not just a semantic one; it represents a fundamental change in developer productivity and the democratization of app creation.

Choosing Your Engine: Claude and Codex Integration

One of the most notable aspects of Xcode 26.3 is Apple’s decision to let developers choose between agents from two leading AI labs. By partnering with both Anthropic and OpenAI, Apple lets developers leverage the specific strengths of each company’s large language models (LLMs) within their own projects.

Anthropic’s Claude Agent and MCP

Anthropic’s integration is particularly significant because of the Model Context Protocol (MCP), an open standard that Anthropic introduced. MCP gives an agent a uniform way to reach local project data, documentation, and external tools, so each new tool does not need its own custom integration. In practice, this lets Claude draw on much more of a complex iOS project’s context, which can make it well suited to refactoring code and to managing large-scale migrations between Swift versions.
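To make the protocol a little more concrete: MCP messages are JSON-RPC 2.0 objects, and a client discovers a server’s tools with a `tools/list` request and invokes one with `tools/call`. The Python sketch below only builds and serializes those two requests. It leaves out the transport (stdio or HTTP) and the `initialize` handshake that opens a real session, and the tool name `build_project` and its `scheme` argument are hypothetical placeholders, not tools Xcode is confirmed to expose.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Shared counter so every request gets a unique JSON-RPC id.
_request_ids = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    request: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools() -> Dict[str, Any]:
    """Ask an MCP server which tools it exposes."""
    return make_request("tools/list")


def call_tool(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Invoke one tool by name with its arguments."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # "build_project" and its "scheme" argument are hypothetical placeholders.
    for message in (list_tools(), call_tool("build_project", {"scheme": "WeatherApp"})):
        print(json.dumps(message))
```

Because every tool is reached through the same two methods, a server can add tools without the client needing new integration code, which is the property the paragraph above attributes to MCP.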

OpenAI’s Codex and Agentic Action

Meanwhile, OpenAI’s Codex brings a high degree of autonomy to the IDE. Unlike the chat-only interfaces of the past, these agents can now take action: they can search the web for documentation, run builds, interpret error logs, and even iterate on a UI design in real time. This capability could complement the upcoming Gemini-powered Siri upgrades, pointing toward an ecosystem where AI understands the user’s intent across both consumer and developer platforms.
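Interpreting an error log usually starts with turning raw compiler output into structured records. Swift compiler diagnostics follow the familiar `path:line:column: severity: message` layout, so a minimal Python sketch of that first step might look like the following. The sample log lines are invented for illustration, and a real agent would also need to handle multi-line context, fix-it hints, and build-system output that this regular expression simply skips.

```python
import re
from dataclasses import dataclass
from typing import List

# Matches lines such as "/path/File.swift:12:5: error: message".
DIAGNOSTIC = re.compile(
    r"^(?P<path>[^:\n]+):(?P<line>\d+):(?P<column>\d+): "
    r"(?P<severity>error|warning|note): (?P<message>.+)$"
)


@dataclass
class Diagnostic:
    path: str
    line: int
    column: int
    severity: str
    message: str


def parse_diagnostics(log: str) -> List[Diagnostic]:
    """Extract structured diagnostics from raw compiler output, ignoring other lines."""
    results = []
    for raw in log.splitlines():
        match = DIAGNOSTIC.match(raw)
        if match:
            results.append(
                Diagnostic(
                    path=match["path"],
                    line=int(match["line"]),
                    column=int(match["column"]),
                    severity=match["severity"],
                    message=match["message"],
                )
            )
    return results


if __name__ == "__main__":
    # Invented sample output, for illustration only.
    sample = (
        "/Users/dev/WeatherApp/ForecastView.swift:12:5: error: cannot find 'forecast' in scope\n"
        "/Users/dev/WeatherApp/Model.swift:3:9: warning: variable 'temp' was never mutated\n"
        "Compiling WeatherApp ...\n"
    )
    for d in parse_diagnostics(sample):
        if d.severity == "error":
            print(f"{d.path}:{d.line} -> {d.message}")
```

Once errors are structured like this, an agent can open the exact file and line each one points to instead of reasoning over the whole log.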

The Rise of “Vibe Coding”

The developer community has recently coined the term “vibe coding” to describe a new style of software development where the “coder” provides high-level natural language instructions while the AI agent handles the technical implementation. With Xcode 26.3, vibe coding has officially arrived on the Apple platform.

- Natural Language Blueprints: Developers can describe a feature—such as “create a weather dashboard with a 7-day forecast using SwiftUI and the WeatherKit API”—and the agent will generate the files, set up the data models, and write the view logic.

- Iterative Refinement: If the initial “vibe” isn’t quite right, the developer can simply say, “Make the icons more rounded and add a blur effect to the background,” and the agent updates the code accordingly.

- Autonomous Debugging: When a build fails, the agents don’t just point out the error; they can investigate the compiler output (or, for a runtime crash, the stack trace), propose a fix, and run another build to verify it.
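The build, diagnose, fix, and rebuild cycle in the debugging bullet can be sketched as a bounded loop. Everything here is hypothetical: `build` and `propose_fix` stand in for whatever an agent actually does (invoking the build system, asking a model for a patch), and the stubs simulate a project that compiles after two fixes. The point is only the control flow: verify every fix with a fresh build, stop on success, and give up after a fixed number of attempts.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class BuildResult:
    succeeded: bool
    errors: List[str] = field(default_factory=list)


def fix_until_green(
    build: Callable[[], BuildResult],
    propose_fix: Callable[[List[str]], None],
    max_fixes: int = 3,
) -> bool:
    """Build, apply a fix for the reported errors, and rebuild to verify.

    Returns True as soon as a build succeeds, or False once `max_fixes`
    fixes have been tried without a successful build.
    """
    result = build()
    fixes = 0
    while not result.succeeded and fixes < max_fixes:
        propose_fix(result.errors)  # hypothetical: apply a model-proposed patch
        fixes += 1
        result = build()  # verification build after each fix
    return result.succeeded


if __name__ == "__main__":
    # Simulated project: two outstanding errors, and each "fix" removes one.
    outstanding = ["cannot find 'forecast' in scope", "missing return in closure"]

    def fake_build() -> BuildResult:
        return BuildResult(succeeded=not outstanding, errors=list(outstanding))

    def fake_fix(errors: List[str]) -> None:
        print(f"fixing: {errors[0]}")
        outstanding.pop(0)

    print("green" if fix_until_green(fake_build, fake_fix) else "still failing")
```

The `max_fixes` cap is the key design choice: an agent that cannot converge should hand control back to the developer rather than retry indefinitely.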

This approach significantly lowers the barrier to entry for new developers while allowing seasoned engineers to focus on high-level architecture rather than repetitive boilerplate code.

Market Statistics and the Productivity Boom

The push for agentic coding comes amid rapid growth in the AI developer tool sector. One market estimate values the AI code assistant market at approximately $4.7 billion in 2025 and projects it to exceed $14.6 billion by 2033, a compound annual growth rate (CAGR) of 15.31%.
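As a quick consistency check on those figures, compound growth links them by end value = start value × (1 + rate)^years, with 8 years between 2025 and 2033. The Python snippet below applies that formula in both directions. It only checks that the quoted numbers agree with each other; it says nothing about whether the underlying estimate is accurate.

```python
def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures from the text: $4.7B in 2025, CAGR 15.31%, 8 years to 2033.
    print(f"Projected 2033 value: ${project(4.7, 0.1531, 8):.2f}B")
    print(f"Implied CAGR for $14.6B: {implied_cagr(4.7, 14.6, 8):.2%}")
```

Compounding $4.7 billion at 15.31% for 8 years gives roughly $14.7 billion, and going from $4.7 billion to exactly $14.6 billion implies roughly 15.2% a year, so the three quoted numbers are mutually consistent.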

Moreover, as of late 2025, approximately 72% of professional developers reported using some form of AI coding assistant in their daily workflows. The transition to agentic models in 2026 is expected to push this number even higher, as agents move beyond simple suggestions to handling certain development tasks end to end. This evolution can be read as a response to a kind of “productivity paradox” in software engineering, in which the complexity of modern apps has historically outpaced the speed of manual coding.

Security and Private Cloud Compute

Apple has consistently prioritized privacy, but the integration of third-party agents into Xcode calls for some precision about where data goes. Private Cloud Compute is Apple’s own server infrastructure for Apple Intelligence requests. When a request is sent to an OpenAI or Anthropic server, it is processed on that provider’s infrastructure, so whether the data is retained or used for training depends on the provider’s terms and the developer’s account settings rather than on Private Cloud Compute.

For enterprise developers, this is a critical consideration. Companies building proprietary software need to use state-of-the-art models without putting their intellectual property at risk, so the security of the bridge between local project files and cloud-based intelligence will weigh heavily on whether Apple keeps its reputation as a secure platform for professional creators.

What This Means for the Future of Apps

The integration of Anthropic and OpenAI agents into Apple’s developer tools marks the end of the “assistance” era and the beginning of the “agency” era. We are moving toward a future where the developer’s role is increasingly about oversight, ethics, and user experience design rather than syntax and semicolons.

As these tools become more sophisticated, we can expect to see a surge in high-quality, specialized apps created by smaller teams. The time from “concept to App Store” could shrink from months to weeks, or even days. While there is ongoing debate about the long-term impact on junior developer roles, the current sentiment is one of empowerment. Developers are no longer just builders; they are architects of intelligent systems, supported by increasingly capable agents. This shift is arguably as significant as the introduction of agentic vision in other AI models, and it suggests that the future of technology is not just about seeing the world, but about actively building it.