The Visual Challenge in Scientific Publishing

Researchers are increasingly offloading labor-intensive tasks to automated systems. Large language models (LLMs) can now assist with literature reviews, experimental design, and code generation, but one bottleneck has remained stubbornly manual: creating professional, publication-ready illustrations. Anyone who has spent hours wrestling with LaTeX, TikZ, or Figma to produce a single methodology diagram knows the struggle well. These visuals are critical for human understanding, yet they require both technical accuracy and aesthetic judgment.

To bridge this gap, Google Cloud AI Research, in collaboration with researchers from Peking University, has introduced PaperBanana. This agentic framework is designed to automate the generation of methodology diagrams and statistical plots that meet the standards of top-tier conferences such as NeurIPS and ICML.

How PaperBanana Automates Scientific Illustration

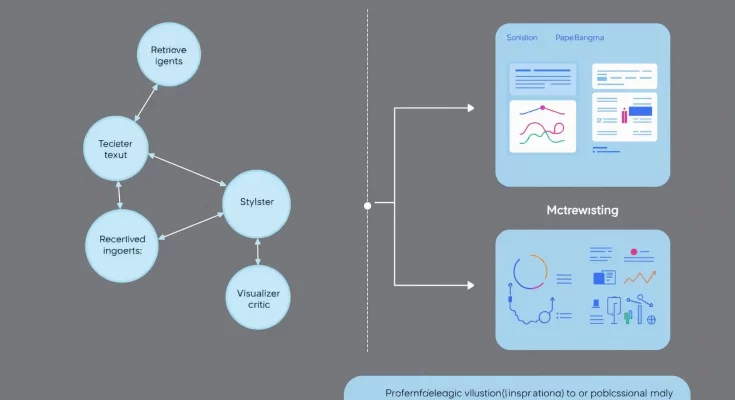

PaperBanana is not a simple generative model. Instead, it is a multi-agent system in which specialized components collaborate to turn a methodology description into a finished figure. By employing an “agentic” workflow, the system can reason about the content, style, and accuracy of an illustration in a way that single-prompt systems cannot.

The Five Core Agents of the Framework

The success of PaperBanana lies in its division of labor. The framework orchestrates five distinct agents, each playing a critical role in the creative pipeline:

- The Retriever Agent: Before drawing a single line, this agent scans databases of professional diagrams from top-tier academic papers. By retrieving relevant references, it establishes a high-level template for structure and layout.

- The Planner Agent: This agent takes the researcher’s raw methodology text and translates it into a detailed visual plan. It identifies the key components of the research architecture and determines how they should be logically connected.

- The Stylist Agent: Academic aesthetics are subtle but distinct. This agent applies learned guidelines regarding color palettes, font choices, and shape consistency to ensure the final output looks like it belongs in a professional journal.

- The Visualizer Agent: Depending on the task, this agent either uses vision-language models (VLMs) to render complex diagrams or writes executable Python (Matplotlib) code to generate numerically precise statistical plots.

- The Critic Agent: Perhaps the most important part of the loop, the Critic Agent inspects each draft against the original text. It flags factual errors, visual clutter, and style inconsistencies, and triggers further rounds of refinement until those issues are resolved.
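
The division of labor above can be sketched as a small pipeline. This is a minimal illustration only, not the framework's actual code: every function name, the `Draft` structure, and the `max_rounds` cap are assumptions made for this example, and each agent is a stub standing in for a model call.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """Hypothetical container for the state passed between agents."""
    plan: str
    style: dict
    references: list
    image: str = ""  # placeholder for the rendered figure
    feedback: list = field(default_factory=list)


def retriever_agent(text):
    # Stub: would search a corpus of published diagrams for similar layouts.
    return ["reference-diagram-1"]


def planner_agent(text, references):
    # Stub: would turn the methodology text into components and connections.
    return f"components and connections for: {text}"


def stylist_agent(plan_text):
    # Stub: would apply learned guidelines for color, fonts, and shapes.
    return {"palette": "muted", "font": "sans-serif"}


def visualizer_agent(draft):
    # Stub: would call an image model or generate plotting code.
    return f"render[{draft.plan}] round={len(draft.feedback)}"


def critic_agent(draft, text):
    # Stub: flags one issue on the first draft, then accepts the revision.
    return [] if draft.feedback else ["reduce visual clutter"]


def generate_figure(text, max_rounds=3):
    """Run retrieve -> plan -> style once, then loop visualize -> critique."""
    references = retriever_agent(text)
    plan_text = planner_agent(text, references)
    draft = Draft(plan=plan_text, style=stylist_agent(plan_text),
                  references=references)
    for _ in range(max_rounds):  # assumed cap so the loop always terminates
        draft.image = visualizer_agent(draft)
        issues = critic_agent(draft, text)
        if not issues:
            break
        draft.feedback.extend(issues)  # the next render sees the critique
    return draft
```

Calling `generate_figure("encoder feeds decoder")` runs two rendering rounds with these stubs: the first draft is criticized, and the second is accepted. Whether the real system caps its refinement rounds is not stated in the article; the cap here is only a guard for the sketch.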

Methodology Diagrams vs. Statistical Plots

PaperBanana addresses two fundamentally different types of scientific visualization. Methodology diagrams require high-level abstraction and careful layout, while statistical plots demand numerical precision. Supporting both lets a single tool cover most of the figures a typical paper needs.

For methodology figures, PaperBanana leverages specialized image models like Nano-Banana-Pro. These models are trained to handle the specific “language” of research diagrams, such as neural network layers, data flow arrows, and modular components. For statistical data, the system bypasses direct image generation in favor of code execution. By generating and running Python code, PaperBanana ensures that every data point is drawn from the underlying values rather than synthesized pixel by pixel, avoiding the “hallucinations” often seen in standard generative AI.
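
To make the code-execution path concrete, here is a rough sketch of the "generate a script, then run it" pattern. It is an assumption-laden illustration rather than PaperBanana's implementation: `build_plot_script` and `run_plot_script` are hypothetical helpers, and a fixed template stands in for the code a language model would write. The point is that the data values are embedded verbatim in the script, so the rendered bars cannot drift from the numbers.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def build_plot_script(labels, values, out_path):
    """Return Matplotlib source that plots the given values verbatim.

    Hypothetical helper: a fixed template stands in for model-written code.
    """
    return "\n".join([
        "import matplotlib",
        "matplotlib.use('Agg')  # headless backend, no display needed",
        "import matplotlib.pyplot as plt",
        f"labels = {list(labels)!r}",
        f"values = {list(values)!r}",
        "fig, ax = plt.subplots(figsize=(4, 3))",
        "ax.bar(labels, values)",
        "ax.set_ylabel('Score')",
        "fig.tight_layout()",
        f"fig.savefig({str(out_path)!r}, dpi=300)",
    ])


def run_plot_script(script):
    """Execute the generated script in a separate Python process.

    Requires Matplotlib to be installed in the current environment.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script_path = Path(tmp) / "plot.py"
        script_path.write_text(script)
        # check=True raises if the generated code fails to run
        subprocess.run([sys.executable, str(script_path)], check=True)
```

A caller would write something like `run_plot_script(build_plot_script(["A", "B"], [0.5, 0.75], "scores.png"))`. Running the script in a subprocess keeps a faulty generated script from crashing the calling program, and a failed run surfaces as an exception that a critic step could act on.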

Benchmarking Against Human Experts

To evaluate the framework, the research team introduced PaperBananaBench. This benchmark consists of nearly 300 test cases curated from recent NeurIPS publications. It covers a wide range of research domains, from computer vision to reinforcement learning, and tests the framework’s ability to replicate various illustration styles.

The results were striking. In human-subject studies, diagrams produced by PaperBanana were preferred over those created by human experts (including PhD students from prestigious universities) nearly 75% of the time. The framework consistently scored higher on faithfulness to the text, conciseness, readability, and overall aesthetic appeal. This suggests that the system could improve scientific communication by making complex ideas more accessible through clearer visual design.

Integration with the Modern AI Research Stack

PaperBanana represents a major step toward the concept of the “Autonomous AI Scientist.” Alongside tools like OpenAI Prism, which provides AI-native workspaces for scientific writing, automated visualization fills in one of the last manual steps. Researchers could move from a raw idea to a fully illustrated, formatted, and statistically verified paper with minimal manual intervention.

Furthermore, the framework is designed to be accessible. By reducing the “illustration tax” (the dozens of hours researchers spend on figures), it allows scientists to focus more on core discovery and less on the minutiae of graphic design. This is particularly beneficial for researchers who lack formal design training and for those in non-visual disciplines who still need to communicate their findings effectively.

The Future of Agentic Visual Reasoning

The implications of PaperBanana extend beyond academic papers. The ability of an AI system to understand complex technical concepts and re-render them as structured, aesthetic visuals has broad applications in technical documentation, educational material, and industrial reporting. As Google Cloud AI Research continues to refine these agentic workflows, similar systems may be applied to everything from architectural blueprints to software flowcharts.

However, the researchers also emphasize the importance of keeping a human in the loop. While the Critic Agent handles the bulk of the refinement, final approval still rests with the scientist. This collaborative approach ensures that while the labor is automated, scientific integrity and intent remain firmly under human control.

Conclusion

PaperBanana is more than just a clever name; it is a demonstration of how specialized AI agents can tackle niche, high-value problems in the scientific community. By automating the visual side of research, Google Cloud AI Research and its collaborators at Peking University are helping to accelerate the pace of scientific communication. As the framework evolves and the underlying models become more capable, the days of struggling with manual diagram tools may soon be a thing of the past. For the global research community, the fruit of this labor is a more efficient, visually compelling future for science.

For technical details of the framework, see the full research paper on arXiv (arXiv:2601.23265).