Introduction to Anthropic’s Approach

Anthropic has taken an unusual approach to benchmarking its latest AI model, Claude 3.7 Sonnet: having it play the classic Game Boy game Pokémon Red. The choice may seem whimsical, but it offers an interesting way to test how well an AI model handles a long, open-ended task.

The Benchmarking Process

In a blog post published on Monday, Anthropic described how it equipped Claude 3.7 Sonnet to play the game. The model was given capabilities that let it:

- Store basic memory

- Process input from screen pixels

- Execute function calls to press buttons and navigate the game

This setup allowed Claude 3.7 Sonnet to play Pokémon continuously, interacting with the game through the screen and buttons, much as a human player would.
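The three capabilities above fit naturally into an observe, decide, act loop. The sketch below is a minimal, hypothetical harness and not Anthropic's actual code: `FakeEmulator`, `press_button`, `choose_action`, and the list-based memory are illustrative stand-ins, and a scripted policy takes the place of the model so the loop runs offline.

```python
from dataclasses import dataclass

# Buttons a Game Boy exposes; the agent can only act through these.
BUTTONS = {"up", "down", "left", "right", "a", "b", "start", "select"}


@dataclass
class FakeEmulator:
    """Hypothetical stand-in for an emulator: a player on a 4x4 grid."""
    x: int = 0
    y: int = 0

    def screen_pixels(self) -> list[list[int]]:
        # A tiny "screen": 0 is empty, 1 marks the player's tile.
        grid = [[0] * 4 for _ in range(4)]
        grid[self.y][self.x] = 1
        return grid

    def press(self, button: str) -> None:
        # Directional buttons move the player; others do nothing here.
        moves = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}
        dx, dy = moves.get(button, (0, 0))
        self.x = min(3, max(0, self.x + dx))  # clamp to the 4x4 screen
        self.y = min(3, max(0, self.y + dy))


def press_button(emu: FakeEmulator, button: str) -> str:
    """Hypothetical function-call target: validate, then forward one press."""
    if button not in BUTTONS:
        return f"error: unknown button {button!r}"
    emu.press(button)
    return f"pressed {button}"


def choose_action(pixels: list[list[int]], memory: list[str]) -> str:
    """Scripted placeholder for the model: walk right to the edge, then down.

    A real harness would send the pixels and memory to the model instead.
    """
    _, x = next((r, c) for r, row in enumerate(pixels)
                for c, v in enumerate(row) if v == 1)
    return "right" if x < 3 else "down"


def run(steps: int) -> tuple[FakeEmulator, list[str]]:
    emu = FakeEmulator()
    memory: list[str] = []  # basic memory: a running log of outcomes
    for _ in range(steps):
        pixels = emu.screen_pixels()            # 1. observe the screen
        button = choose_action(pixels, memory)  # 2. decide on an action
        result = press_button(emu, button)      # 3. act via a function call
        memory.append(result)                   # 4. remember what happened
    return emu, memory


emu, memory = run(5)
print((emu.x, emu.y), memory)
```

Running five steps moves the player three tiles right and two tiles down. Keeping the function-call layer separate from the policy means invalid actions can be rejected with an error message rather than crashing the loop.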

Extended Thinking: A Game Changer

One of the standout features of Claude 3.7 Sonnet is what Anthropic calls “extended thinking.” With it enabled, the model can:

- Take additional time to analyze challenging issues

- Apply sophisticated reasoning capabilities to overcome hurdles

This feature places Claude 3.7 Sonnet alongside other reasoning models such as OpenAI’s o3-mini and DeepSeek’s R1, which also spend extra computation working through a problem before they answer.
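For developers, extended thinking is switched on per request. The sketch below only builds the JSON body for Anthropic's Messages API; the field names and the budget rules reflect our reading of Anthropic's public documentation at the time of Claude 3.7 Sonnet's release and should be checked against the current docs. Sending the request (API key, HTTP client) is deliberately omitted, and `build_thinking_request` is a hypothetical helper name.

```python
import json


def build_thinking_request(prompt: str, budget_tokens: int = 16000,
                           max_tokens: int = 20000) -> dict:
    """Hypothetical helper: a Messages API body with extended thinking on."""
    # Assumption from the public docs: the thinking budget must be at least
    # 1,024 tokens and smaller than max_tokens.
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be less than max_tokens")
    return {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


body = build_thinking_request("The player is stuck in a cave. What should it try next?")
print(json.dumps(body, indent=2))
```

A larger `budget_tokens` lets the model reason longer on hard problems at the cost of latency and tokens, which is the trade-off the feature exposes.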

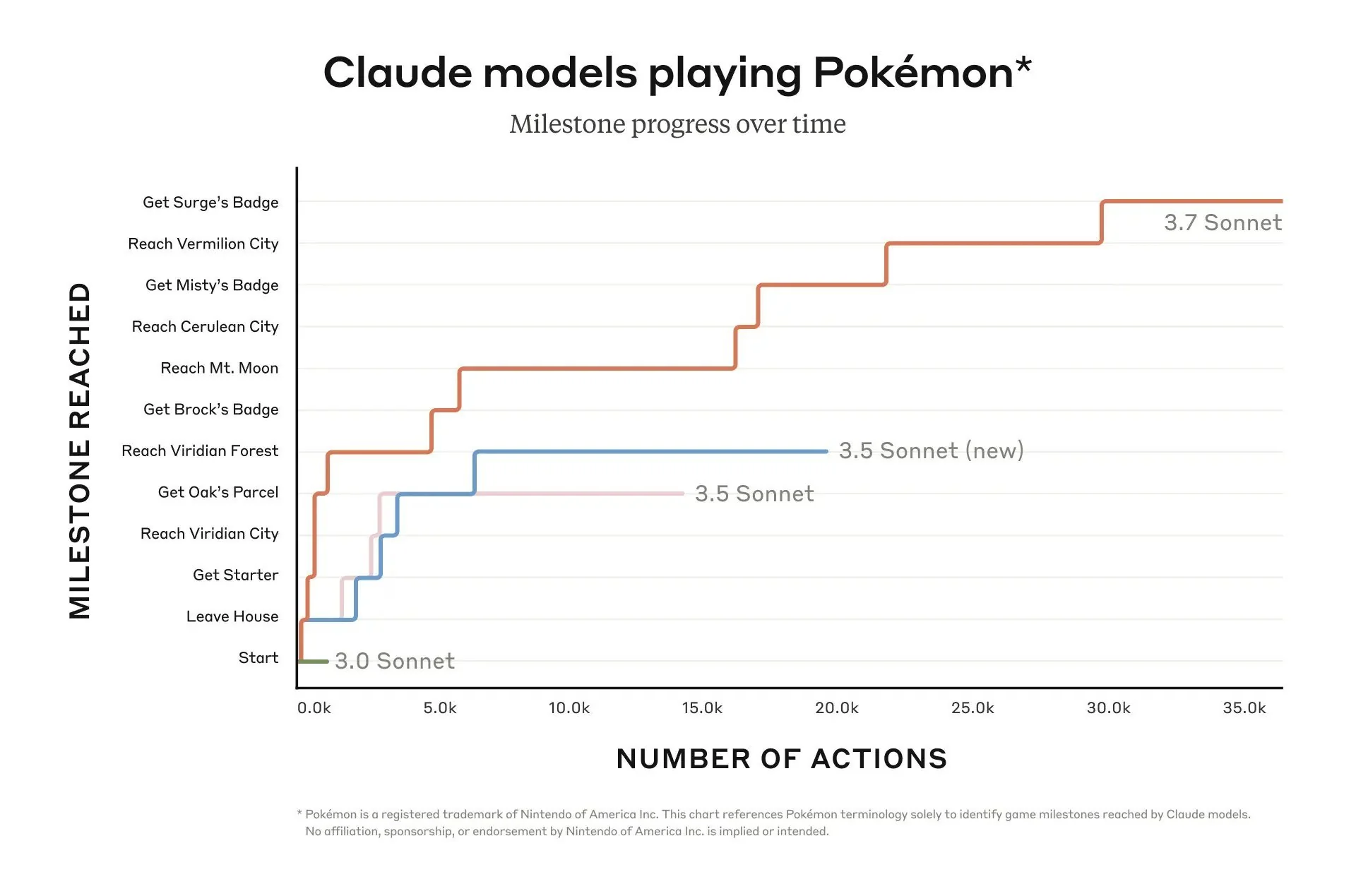

Claude 3.7 Sonnet’s Progress in Pokémon Red

Anthropic’s earlier model, Claude 3.0 Sonnet, never made it out of Pallet Town, where the game begins. Claude 3.7 Sonnet, by contrast, went on to defeat three Pokémon gym leaders and earn their badges.

Performance Metrics

Anthropic has not disclosed how much computing power or time Claude 3.7 Sonnet needed to reach these milestones. The company did say that the model took 35,000 actions to reach Lt. Surge, the last of the three gym leaders it challenged.

This result suggests that games could serve as a useful AI benchmark, although how well such tests reflect a model’s broader capabilities remains an open question.

Historical Context of AI Benchmarking in Gaming

Using games to benchmark AI is not new; games have long served as testbeds for AI research. In recent months, a number of apps and platforms have emerged to evaluate models’ game-playing abilities across a range of titles, including:

- Street Fighter

- Pictionary

- Chess

Like Pokémon, these titles offer useful settings for examining how AI models handle real-time interaction and strategic thinking.

The Broader Impact of AI on Startups and Gaming

The intersection of AI, gaming, and startups reflects a growing convergence of technology and creativity. Anthropic’s experiment shows how unconventional tests can shed light on what AI systems are able to do.

Future Implications for Developers

As developers continue to explore the capabilities of models like Claude 3.7 Sonnet, we may see:

- Breakthroughs in game testing methodologies

- Novel applications of AI in gaming and real-world scenarios

- Increased interest in AI development from both startups and established firms

Conclusion

Anthropic’s experiment with Pokémon Red highlights the playful side of AI development and may influence how the industry approaches benchmarking. As models like Claude 3.7 Sonnet improve, the range of what AI can achieve, particularly in interactive environments, continues to expand. The experiment is also a reminder that technology and creativity remain closely intertwined in the story of AI.